Shadow AI refers to AI applications and tools used without the clear knowledge or control of an organization’s IT department. The concept evolved from ‘Shadow IT,’ which refers to IT systems and solutions not managed or approved by the corporate IT department. However, Shadow AI poses potentially greater risks due to AI systems’ inherent complexity and unpredictability.

A Salesforce survey of more than 14,000 workers across 14 countries found that many generative AI users are using the technology without proper training, guidance, or employer approval.

The rise of AI has shaken the entire tech industry, including enterprise cybersecurity, as businesses must now contend with Shadow AI.


What Is Shadow AI and Why Is It Dangerous?

The term ‘Shadow AI’ refers to any unauthorized use of AI within an organization, which can leave the company open to data exposure, exploitation, and other issues. It is often overlooked, but because it is hard to detect, its potential dangers are a growing concern.

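Shadow AI can also shape outcomes in ways that are easy to miss, much as social media algorithms, personalized recommendations, and targeted advertising shape what people see without their noticing. Unlike sanctioned AI, which is documented and monitored, Shadow AI operates in the background, influencing decisions and recommendations without the organization’s awareness.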

A common form of Shadow AI is staff installing or using AI tools on their work systems to improve efficiency and productivity, often without management’s knowledge. These users may not realize that the AI provider can record and retain their prompts, which can expose sensitive company data.

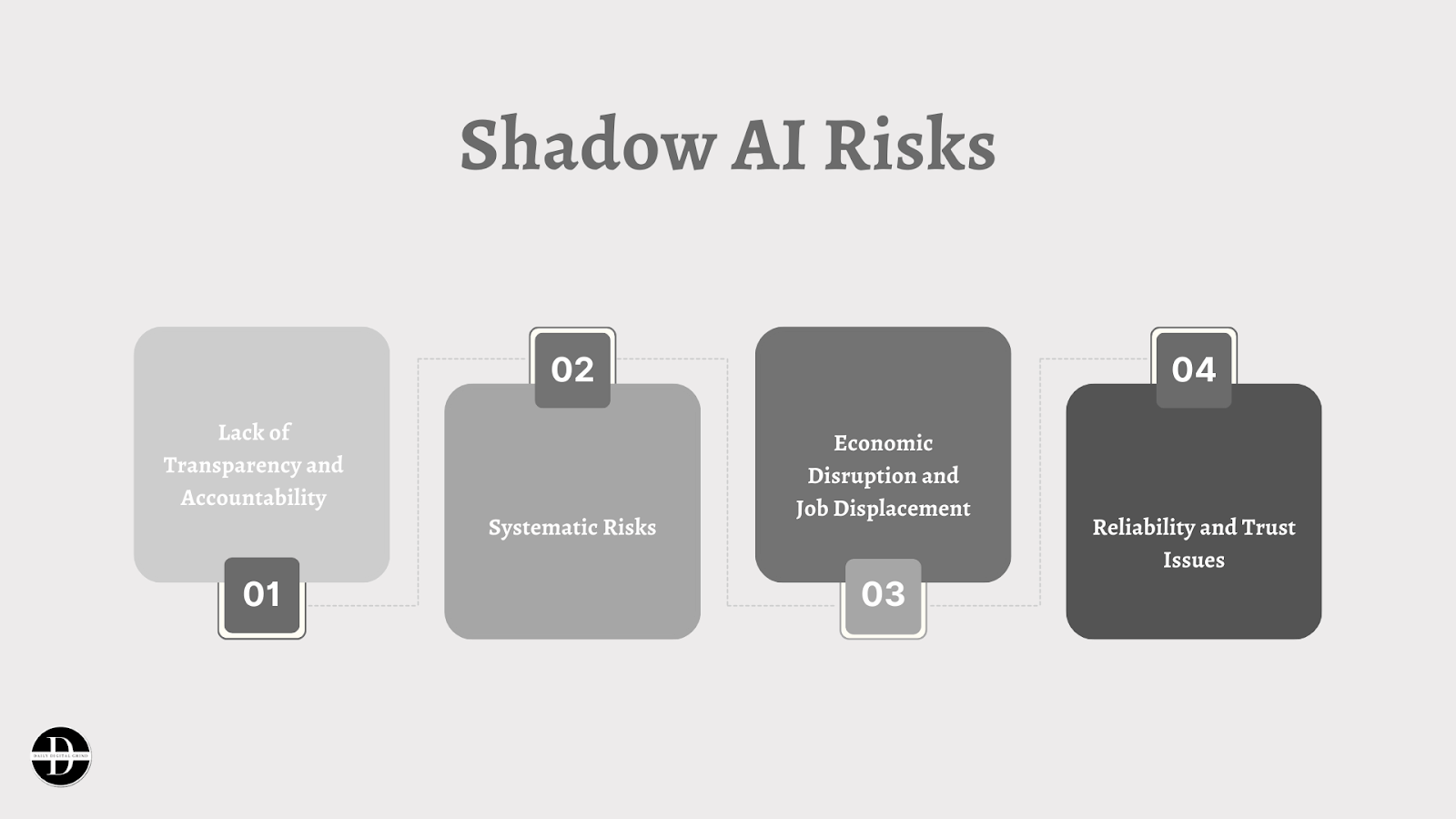

Shadow AI Risks

Shadow AI poses numerous risks and challenges that require careful attention.

Here are some of them:

1. Lack of Transparency and Accountability

The lack of transparency and accountability is a serious Shadow AI risk, as users cannot see how the underlying algorithms work or how they reach their decisions. This undermines confidence and hampers decision-making. Without proper documentation and approval processes, organizations have limited options for addressing errors, biases, or ethical violations that may arise from Shadow AI systems.

2. Systemic Risks

The rise of Shadow AI creates a serious threat in the form of systemic risk: the possibility that a failure in one component spreads through interconnected systems, causing widespread disruption and harm. This can have major consequences for critical sectors such as finance, healthcare, transportation, and infrastructure.

3. Economic Disruption and Job Displacement

According to the IMF, almost 40% of jobs around the world are exposed to AI. Shadow AI has the potential to transform industries and reshape labor markets, with serious socioeconomic effects. It could render certain jobs obsolete, especially in industries that rely on repetitive tasks.

The rapid growth of AI-driven automation may outpace workers’ ability to adapt, leading to unemployment and widening income inequality. Displaced workers may face financial hardship, social isolation, and poor health.

4. Data Privacy Risks

Unauthorized AI tools may lack adequate security measures, making data vulnerable to breaches or misuse. Shadow AI users may unintentionally leak private user data and intellectual property when interacting with these tools.

Some AI services use user interactions to train their models, which can make user-provided data accessible to third parties that are not bound by any confidentiality or non-disclosure agreement. Confidential data could end up in the hands of criminals for malicious use.
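One practical safeguard against this kind of leakage is to scrub obvious secrets from prompts before they leave the organization. The Python sketch below is a minimal illustration, not a complete data loss prevention (DLP) solution: the `redact_prompt` function and its three regular expressions are hypothetical and will miss many forms of sensitive data.

```python
import re

# Hypothetical, deliberately simple patterns. Real DLP tooling covers far more
# data types (names, addresses, source code, customer IDs, and so on).
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Assumed key format: "sk-" or "key-" followed by 16+ alphanumerics.
    "API_KEY": re.compile(r"\b(?:sk|key)-[A-Za-z0-9]{16,}\b"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact_prompt(prompt: str) -> str:
    """Replace sensitive-looking substrings with [LABEL] placeholders."""
    for label, pattern in REDACTION_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


if __name__ == "__main__":
    # prints "Email [EMAIL], key [API_KEY]"
    print(redact_prompt("Email jane.doe@example.com, key sk-abcdef1234567890XYZ"))
```

Pattern-based redaction is cheap and transparent, but it only catches data with a recognizable shape, so it complements rather than replaces approved tools and employee training.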

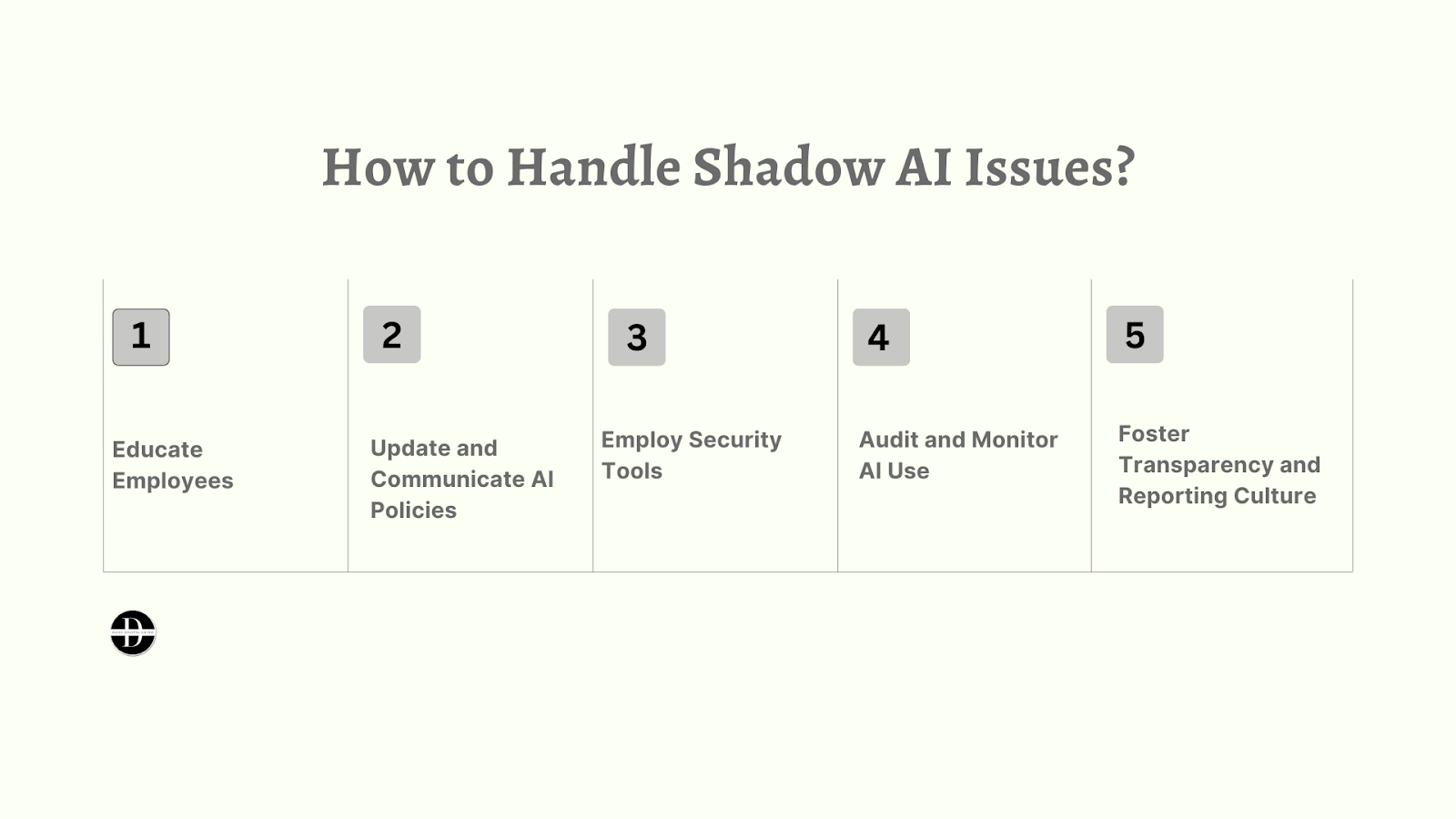

How to Handle Shadow AI Issues?

To tackle Shadow AI problems, organizations need a comprehensive strategy that spans technology, regulation, and people.


1. Educate Employees

Training programs should cover various aspects of Shadow AI, including its definition, applications, benefits, and risks. Employees should know how these tools may influence decision-making processes and how to identify biases or ethical concerns. They should also understand their responsibilities regarding data privacy and security.

2. Update and Communicate AI Policies

Organizations must regularly update and communicate AI policies to manage risks associated with Shadow AI and ensure alignment with ethical standards and regulatory requirements. These policies should cover areas such as data privacy, algorithmic transparency, bias mitigation, security measures, and ethical considerations.

As AI technologies evolve, new challenges emerge, so organizations must regularly review and revise their policies.
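One way to make parts of such a policy enforceable is to express them as code. The sketch below assumes a hypothetical register of approved tools (`APPROVED_TOOLS`), each mapped to the most sensitive data classification it may handle; the tool names and the four classification levels are illustrative, not a standard.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical data classification levels, ordered by sensitivity."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical approved-tool register: tool name -> highest data class allowed.
APPROVED_TOOLS = {
    "internal-chatbot": DataClass.CONFIDENTIAL,
    "code-assistant": DataClass.INTERNAL,
}


def is_use_permitted(tool: str, data_class: DataClass) -> bool:
    """Return True only if the tool is approved for data at this sensitivity."""
    max_allowed = APPROVED_TOOLS.get(tool)
    if max_allowed is None:
        return False  # unlisted tools are treated as Shadow AI (default deny)
    return data_class <= max_allowed


if __name__ == "__main__":
    print(is_use_permitted("code-assistant", DataClass.CONFIDENTIAL))  # False
```

Denying unlisted tools by default means any tool missing from the register is treated as Shadow AI until it has been reviewed, and revising the policy becomes a reviewable change to the register.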

3. Employ Security Tools

Organizations must implement strong security tools to protect against risks associated with Shadow AI. These tools serve as defense mechanisms against security threats, data breaches, and unauthorized access to sensitive information. They can employ detection systems, encryption techniques, access controls, and authentication mechanisms to protect AI systems.

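Security tools such as endpoint protection and Cloud Access Security Brokers (CASBs) can help address the greatest risks, which come from remote users and cloud-based AI platforms. A CASB sits between users and cloud services, giving visibility into which services are in use and enforcing the organization’s policies on them.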
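As a rough illustration of the discovery side of these tools, the sketch below scans web proxy logs for requests to generative AI services the organization has not approved. It assumes a simplified, hypothetical log format (`<timestamp> <user> <domain>`) and a small illustrative watchlist of domains; commercial CASB products work from much richer logs and continuously updated service catalogs.

```python
from collections import Counter

# Illustrative watchlist only (an assumption, far from exhaustive).
AI_DOMAINS = {"chat.openai.com", "api.openai.com", "gemini.google.com", "claude.ai"}
# Hypothetical: services the organization has formally approved.
APPROVED_DOMAINS = {"api.openai.com"}


def find_shadow_ai(log_lines):
    """Count requests per (user, domain) to AI services that are not approved.

    Assumes each line looks like "<timestamp> <user> <domain>" and matches
    domains exactly, so subdomains of a listed service are not caught.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip malformed or differently formatted lines
        _, user, domain = parts
        domain = domain.lower()
        if domain in AI_DOMAINS and domain not in APPROVED_DOMAINS:
            hits[(user, domain)] += 1
    return hits


if __name__ == "__main__":
    sample = [
        "2024-05-01T09:00Z alice chat.openai.com",
        "2024-05-01T09:05Z bob api.openai.com",
        "2024-05-01T09:07Z alice chat.openai.com",
        "2024-05-01T09:10Z carol example.com",
    ]
    for (user, domain), count in find_shadow_ai(sample).most_common():
        print(f"{user} -> {domain}: {count} request(s)")
```

Flagged results are a starting point for a conversation with the user, not proof of wrongdoing, which fits the reporting culture the article recommends.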

4. Audit and Monitor AI Use

Regular audits and monitoring of AI use are necessary to verify policy compliance, detect risks, and maintain the integrity and effectiveness of AI systems. Auditing involves systematically reviewing AI systems, processes, and data to evaluate their performance and compliance.

To effectively audit and monitor AI use, organizations should establish clear audit trails, logging mechanisms, and monitoring processes. Responsible AI requires continuous monitoring and improvement.
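One way to make an audit trail tamper-evident is hash chaining: each log entry stores a SHA-256 hash of the previous entry, so altering an earlier record breaks the chain. The `AuditTrail` class below is a minimal in-memory sketch of that idea with assumed field names; a production system would write to append-only storage and keep a copy of the latest hash somewhere an attacker cannot rewrite it.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only, hash-chained log of AI tool usage (a minimal sketch)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def record(self, user: str, tool: str, action: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "tool": tool,
            "action": action,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    trail = AuditTrail()
    trail.record("alice", "internal-chatbot", "summarize quarterly report")
    trail.record("bob", "code-assistant", "generate unit tests")
    print(trail.verify())  # True
    trail.entries[0]["user"] = "mallory"  # simulate tampering
    print(trail.verify())  # False
```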


5. Foster Transparency and Reporting Culture

Build an open culture where employees can report the unauthorized use of AI tools or systems without fear of retaliation. This promotes transparency and helps contain the impact of incidents.

As AI technologies expand rapidly, organizations must address the risks these technologies create. Strategies such as transparency, regulatory oversight, algorithmic audits, user empowerment, data privacy, security measures, continuous monitoring, and public dialogue can help organizations handle Shadow AI issues effectively.

Investing in employee education, security tools, auditing, and fostering transparency can help build trust and reduce the risks associated with Shadow AI. By adopting a holistic approach, organizations can benefit from the transformative potential of AI technologies.

Visit Daily Digital Grind to explore more articles related to Artificial Intelligence.

FAQs

Where can Shadow AI be found?

Shadow AI refers to AI tools used within an organization without approval or oversight, such as chatbots for customer interactions or analytics tools for financial teams. This can lead to data breaches and skewed business decisions, even when the intent is good.

What are the risks associated with Shadow AI?

Shadow AI poses risks such as lack of transparency, amplification of bias, security and privacy vulnerabilities, and systemic risks.

How can users protect themselves from the negative effects of Shadow AI?

To protect against Shadow AI’s negative impacts, users should stay informed and cautious, advocate for transparency, and make informed decisions about their interactions with AI systems.