As the use of AI continues to grow, Responsible AI has emerged to address and mitigate the risks that stem from AI hallucinations and malicious human intent. Responsible AI is an approach to developing and deploying artificial intelligence from both an ethical and a legal perspective.

The objective of Responsible AI is to use AI in a secure, reliable, and ethical way. Using AI responsibly helps build transparency and mitigate issues such as AI bias.

When used responsibly, AI can benefit society by enhancing efficiency, adaptation, and augmentation. One research paper found that AI could enable 134 of the 169 targets (about 79 percent) in the United Nations' 2030 Agenda for Sustainable Development, particularly in the economy and environment, although its use also raises ethical and legal concerns. This suggests that AI can do real good in the world.

Let’s explore why Responsible AI matters and how it can be put into practice.

Why Is Responsible AI Important?

Irresponsible AI systems can reinforce existing inequalities, creating a feedback loop of biased data and unfair outcomes. For instance, one AI hiring tool was found to discriminate against women when filtering resumes.

Deployment failures can also occur when AI models are deployed poorly, leading to misunderstanding and misuse. This can happen when models are trained on degraded data, when opaque or black-box models are used, or when decisions are made with the wrong model.

AI technologies have the potential to advance society, but they must be used responsibly.

Widespread use of AI requires a mindful approach to prevent inherent biases. In a special address at the World Economic Forum, European Commission President Ursula von der Leyen highlighted the significant opportunity that AI offers, emphasizing its responsible use. She said:

“AI is a very significant opportunity – if used in a responsible way.”

Initiatives that monitor the negative consequences of AI, such as the AI Incident Database and organizations like the Algorithmic Justice League, also stress the need for responsible AI deployment.

Microsoft Framework for Building Responsible AI Systems

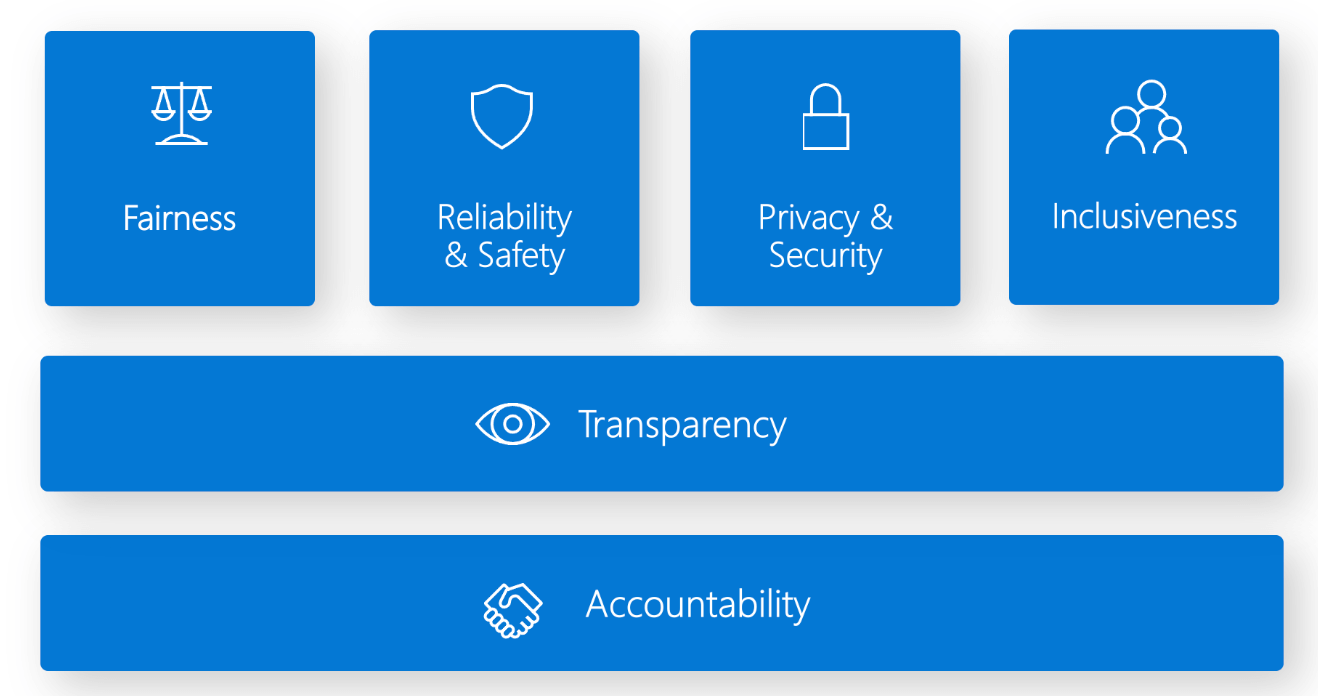

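Microsoft has developed a framework to guide the responsible use of AI, built around six principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.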

Let’s explore how Azure Machine Learning provides tools for data scientists and developers to implement and operationalize these principles.


1. Fairness & Inclusiveness

AI systems should treat everyone fairly and avoid affecting similarly situated groups of people in different ways. Azure Machine Learning’s fairness assessment component evaluates model fairness across sensitive groups, such as gender, ethnicity, and age, helping teams build systems that are fair and inclusive.
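
To make "assessing fairness across sensitive groups" concrete, here is a minimal plain-Python sketch of one common group fairness metric, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. It does not use Azure Machine Learning, and the function names and the screening data are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Return the fraction of positive predictions (1s) for each sensitive group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical resume-screening decisions (1 = shortlisted) and a sensitive feature.
y_pred = [0, 1, 0, 0, 1, 0, 1, 1]
gender = ["F"] * 4 + ["M"] * 4

print(selection_rates(y_pred, gender))                # {'F': 0.25, 'M': 0.75}
print(demographic_parity_difference(y_pred, gender))  # 0.5
```

A difference of 0.5 means one group is shortlisted three times as often as the other, which is the kind of disparity a fairness assessment is meant to surface for human review. Demographic parity is only one of several fairness definitions; which one is appropriate depends on the use case.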

2. Reliability & Safety

AI systems should operate reliably, safely, and consistently to build trust. Azure Machine Learning’s error analysis component helps developers understand how model failures are distributed and identify data cohorts with higher error rates. This helps surface discrepancies that arise when a model underperforms for specific demographic groups or for input conditions that are infrequent in the training data.
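
The core idea behind cohort-based error analysis can be sketched in a few lines: compute the error rate for each cohort and flag cohorts that do noticeably worse than the model overall. This is not how Azure ML implements its component; the cohort labels, data, and the `flag_weak_cohorts` helper with its `tolerance` parameter are hypothetical.

```python
from collections import defaultdict


def error_rate_by_cohort(y_true, y_pred, cohorts):
    """Return {cohort: error rate} so cohorts where the model fails more often stand out."""
    counts = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, cohort in zip(y_true, y_pred, cohorts):
        counts[cohort] += 1
        errors[cohort] += int(truth != pred)
    return {c: errors[c] / counts[c] for c in counts}


def flag_weak_cohorts(rates, overall, tolerance=0.1):
    """Hypothetical helper: list cohorts whose error rate exceeds the overall rate by more than `tolerance`."""
    return sorted(c for c, r in rates.items() if r - overall > tolerance)


# Hypothetical labels and predictions; "night" inputs are rare in this data.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 0]
cohorts = ["day"] * 7 + ["night"] * 3

overall = sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)
rates = error_rate_by_cohort(y_true, y_pred, cohorts)

print(overall)                           # 0.2
print(rates)                             # {'day': 0.0, 'night': 0.6666666666666666}
print(flag_weak_cohorts(rates, overall)) # ['night']
```

The overall error rate of 20 percent hides the fact that every error falls in the rare "night" cohort, which is exactly the kind of infrequent input condition the paragraph above describes.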

3. Transparency

AI systems increasingly inform decisions that affect people’s lives, so people need to understand how those decisions are made. Azure ML provides model interpretability and counterfactual what-if components that make predictions understandable to humans. It also offers a Responsible AI scorecard that developers can use to educate stakeholders, achieve compliance, build trust, and support audit reviews.
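
A counterfactual what-if analysis asks: "what is the smallest change to this input that would change the model's decision?" The sketch below shows that idea with a deliberately simple, hypothetical loan model and a brute-force search over one feature at a time. Azure ML's counterfactual component uses its own, more sophisticated methods; `approve_loan`, `what_if`, the feature names, and the threshold are all assumptions made for illustration.

```python
def approve_loan(applicant):
    """Hypothetical toy model: approve when an integer score reaches 60."""
    score = (applicant["income_k"]
             + 30 * applicant["years_employed"]
             - applicant["debt_k"])
    return score >= 60


def what_if(model, applicant, feature, values):
    """Return the first value of `feature` (tried in order) that flips the model's decision, or None."""
    original = model(applicant)
    for value in values:
        candidate = {**applicant, feature: value}  # change one feature, keep the rest fixed
        if model(candidate) != original:
            return value
    return None


applicant = {"income_k": 40, "years_employed": 1, "debt_k": 30}

print(approve_loan(applicant))                                            # False
print(what_if(approve_loan, applicant, "income_k", range(40, 201, 5)))    # 60
print(what_if(approve_loan, applicant, "years_employed", range(1, 11)))   # 2
```

The output reads as a human-understandable explanation: this applicant would have been approved with an income of 60k instead of 40k, or with two years of employment instead of one.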

4. Privacy & Security

As AI becomes more prevalent, protecting privacy and securing data become more important, because AI systems need access to data to make accurate predictions and decisions. Complying with privacy laws and giving consumers control over their data is essential. Azure ML allows administrators and developers to create secure configurations, restrict access, and enforce security measures such as encryption, vulnerability scanning, and policy auditing.

5. Accountability

The people who design and develop AI systems must be accountable for how those systems operate, and AI systems should not be the final authority on decisions that affect people’s lives. Azure ML supports accountability through model registration, deployment, governance data capture, and monitoring, which fosters trust and gives stakeholders insight into how models are built and used.
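
The accountability ideas above (registering models, capturing governance data, and keeping a human in the loop) can be illustrated with a small in-memory registry. This is a hypothetical sketch, not the Azure ML model registry API: `ModelRegistry`, `ModelRecord`, and their methods are invented here. It fingerprints each model artifact, records who owns each version, requires approval from a different person before deployment, and keeps an audit log of every action.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModelRecord:
    name: str
    version: int
    sha256: str                        # fingerprint of the model artifact bytes
    owner: str                         # person accountable for this version
    registered_at: str                 # UTC timestamp of registration
    approved_by: Optional[str] = None  # reviewer who signed off, if any


class ModelRegistry:
    """Hypothetical in-memory registry with an audit trail (not the Azure ML API)."""

    def __init__(self):
        self._records = {}
        self.audit_log = []

    def register(self, name, artifact: bytes, owner):
        version = 1 + sum(1 for (n, _) in self._records if n == name)
        record = ModelRecord(name, version, hashlib.sha256(artifact).hexdigest(),
                             owner, datetime.now(timezone.utc).isoformat())
        self._records[(name, version)] = record
        self.audit_log.append(f"{owner} registered {name} v{version}")
        return record

    def approve(self, name, version, reviewer):
        record = self._records[(name, version)]
        if reviewer == record.owner:  # separation of duties: no self-approval
            raise PermissionError("owner cannot approve their own model")
        record.approved_by = reviewer
        self.audit_log.append(f"{reviewer} approved {name} v{version}")

    def deploy(self, name, version):
        record = self._records[(name, version)]
        if record.approved_by is None:  # block deployment without human sign-off
            raise PermissionError(f"{name} v{version} has no recorded approval")
        self.audit_log.append(f"deployed {name} v{version} (approved by {record.approved_by})")
        return record


registry = ModelRegistry()
rec = registry.register("resume-screener", b"model-weights-v1", owner="alice")
registry.approve("resume-screener", rec.version, reviewer="bob")
registry.deploy("resume-screener", rec.version)
for entry in registry.audit_log:
    print(entry)
```

Requiring a second person to approve each version is one possible design choice for making sure a human, not the system alone, stands behind every deployment; real governance processes will differ between organizations.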


Takeaway

Responsible AI aims to create and deliver AI ethically, ensuring that it is used in a safe, reliable, and moral manner, building transparency, and reducing issues such as bias. Implementing Responsible AI guidelines depends on the attentiveness of data analysts and software developers, because the measures needed to prevent discrimination and ensure transparency may vary between organizations.

Responsible AI governance processes should be systematic and repeatable. To navigate potential issues, a crucial step involves examining industry best practices and ethical considerations. By doing so, we can not only leverage AI’s benefits but also ensure its alignment with societal values.

Find out more about AI and its advancements on our website, Daily Digital Grind.

FAQs

How can AI be used responsibly?

To use AI responsibly, review the initial output; verify facts, figures, claims, and data against reliable sources; refine your prompts; and edit the results to reflect your own requirements and style. AI output should be a starting point, not the final product. Be transparent about your use of AI, and make sure it serves its intended purpose and addresses your needs.

What are the four key principles of responsible AI?

The four key principles of Responsible AI include accountability, empathy, transparency, and fairness.

What is the responsible AI standard?

Responsible AI involves designing, developing, and deploying AI with good intentions to empower employees, businesses, and society, fostering the trust and confidence needed to scale it.